Eye into AI: Evaluating the Interpretability of Explainable AI Techniques through a Game With a Purpose

Published at CSCW | Minneapolis, MN | 2023

Abstract

Recent developments in explainable AI (XAI) aim to improve the transparency of

black-box models. However, empirically evaluating the interpretability of these

XAI techniques is still an open challenge. The most common evaluation method is

algorithmic performance, but such an approach may not accurately represent how

interpretable these techniques are to people. A less common but growing

evaluation strategy is to leverage crowd-workers to provide feedback on multiple

XAI techniques to compare them. However, these tasks often feel like work and

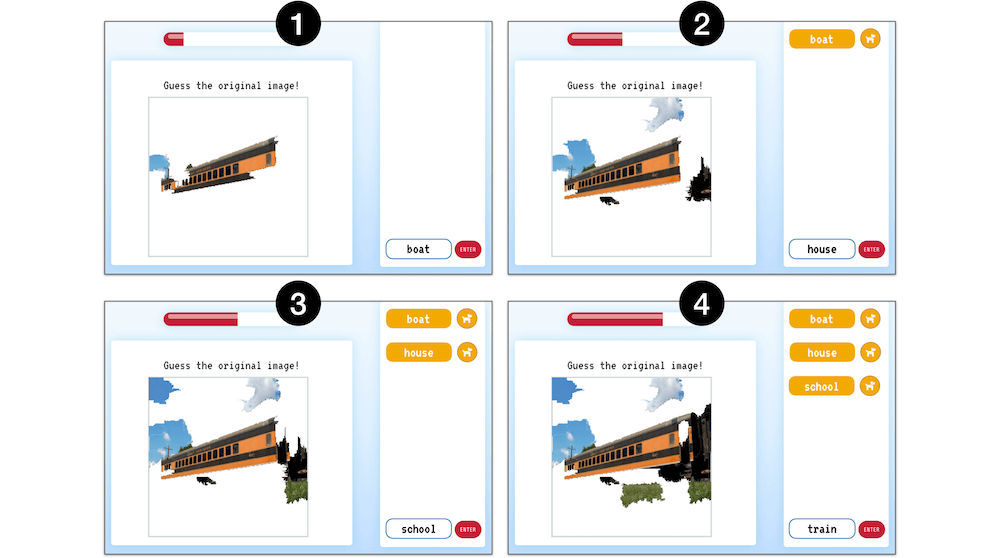

may limit participation. We propose a novel, playful, human-centered method for

evaluating XAI techniques: a Game With a Purpose (GWAP), Eye into AI, that

allows researchers to collect human evaluations of XAI at scale. We provide an

empirical study demonstrating how our GWAP supports evaluating and comparing the

agreement between three popular XAI techniques (LIME, Grad-CAM, and Feature

Visualization) and humans, as well as evaluating and comparing the

interpretability of those three XAI techniques applied to a deep learning model

for image classification. The data collected from Eye into AI offers convincing

evidence that GWAPs can be used to evaluate and compare XAI techniques.