What Did My AI Learn? How Data Scientists Make Sense of Model Behavior

Published at TOCHI, 2022

Abstract

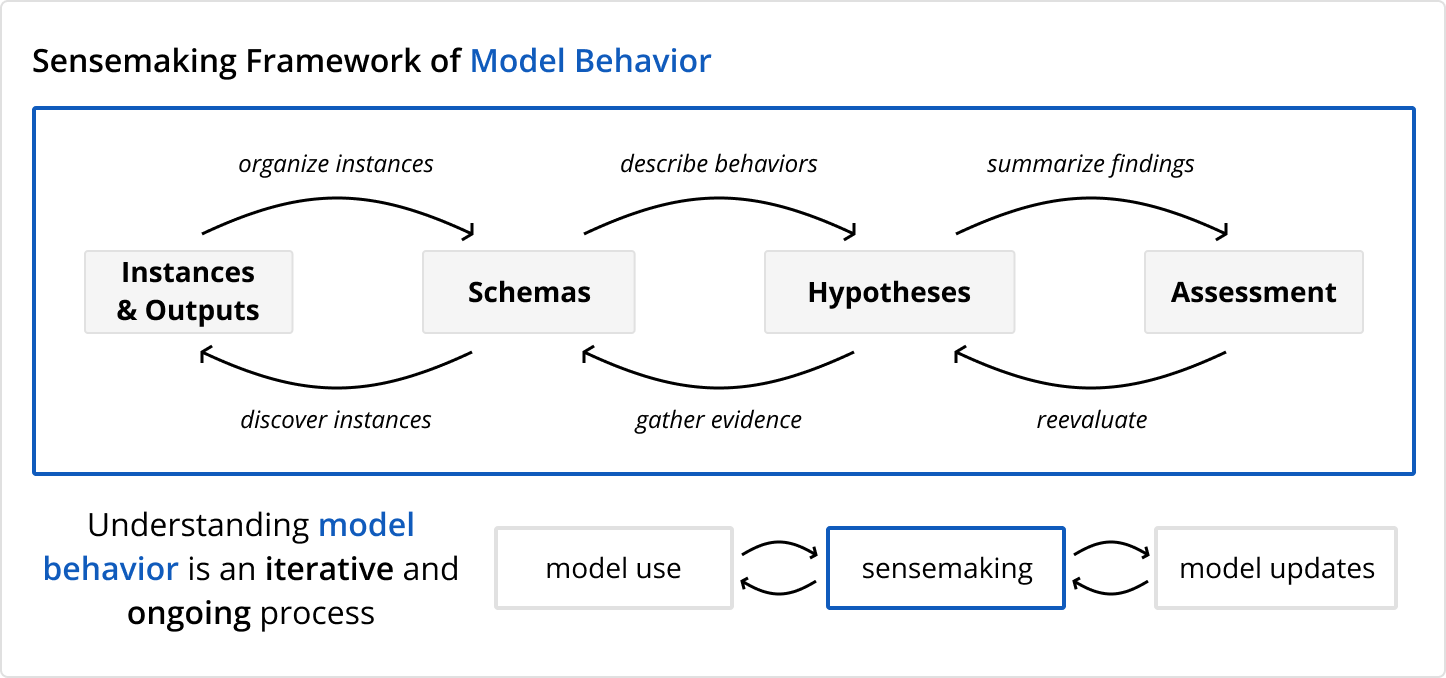

Data scientists require rich mental models of how AI systems behave to effectively train, debug, and work with them. Despite the prevalence of AI analysis tools, there is no general theory describing how people make sense of what their models have learned. We frame this process as a form of sensemaking and derive a framework describing how data scientists develop mental models of AI behavior. To evaluate the framework, we show how existing AI analysis tools fit into this sensemaking process and use it to design AIFinnity, a system for analyzing image-and-text models. Lastly, we explored how data scientists use a tool developed with the framework through a think-aloud study with 10 data scientists tasked with using AIFinnity to pick an image captioning model. We found that AIFinnity's sensemaking workflow reflected participants' mental processes and enabled them to discover and validate diverse AI behaviors.