Improving Human-AI Partnerships in Child Welfare: Understanding Worker Practices, Challenges, and Desires for Algorithmic Decision Support

Anna Kawakami, Hao-Fei Cheng, Logan Stapleton, Yanghuidi Cheng, Diana Qing, Zhiwei Steven Wu, Haiyi Zhu

Published at CHI 2022 (New Orleans, LA)

- Best Paper Honorable Mention

Abstract

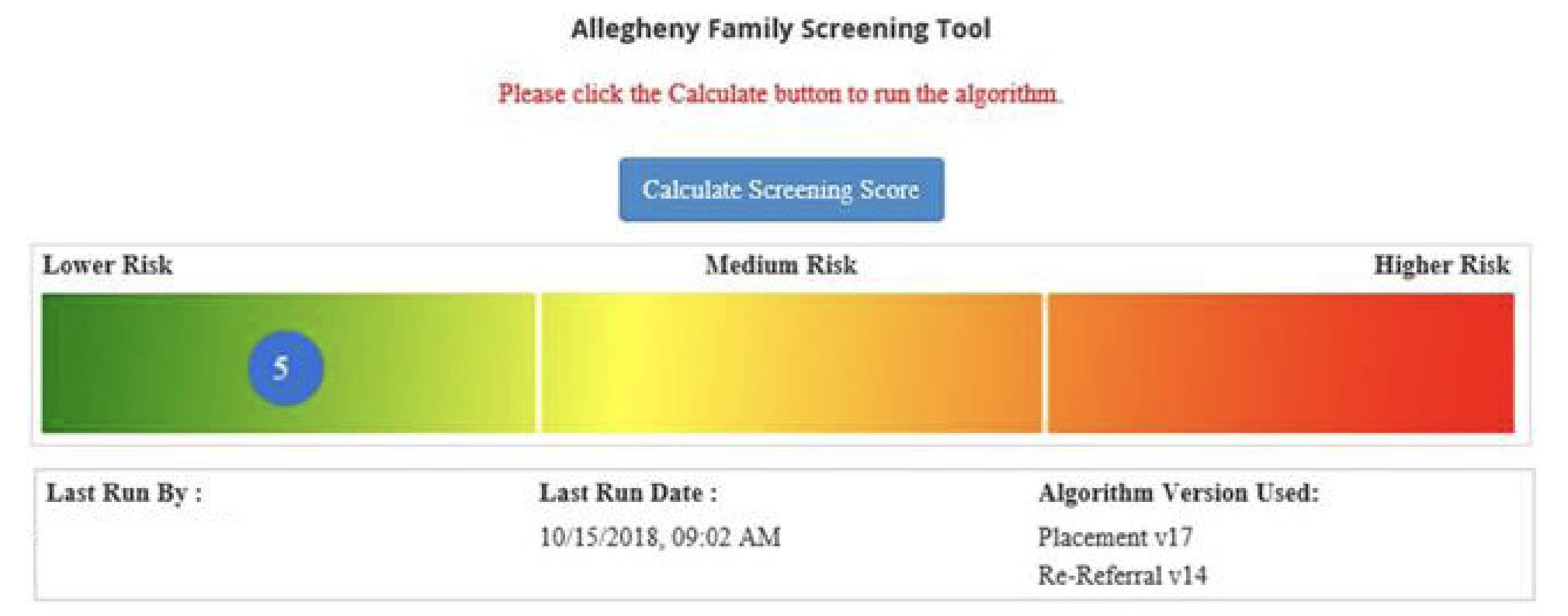

AI-based decision support tools (ADS) are increasingly used to augment human decision-making in high-stakes social contexts. As public sector agencies begin to adopt ADS, it is critical that we understand workers' experiences with these systems in practice. In this paper, we present findings from a series of interviews and contextual inquiries at a child welfare agency to understand how workers currently make AI-assisted child maltreatment screening decisions. Overall, we observe that workers' reliance on the ADS is guided by (1) their knowledge of rich, contextual information beyond what the AI model captures, (2) their beliefs about the ADS's capabilities and limitations relative to their own, (3) organizational pressures and incentives around the use of the ADS, and (4) their awareness of misalignments between algorithmic predictions and their own decision-making objectives. Drawing on these findings, we discuss design implications for supporting more effective human-AI decision-making.