Characterizing Human Explanation Strategies to Inform the Design of Explainable AI for Building Damage Assessment

Published at AI for HADR at NeurIPS 2021

Abstract

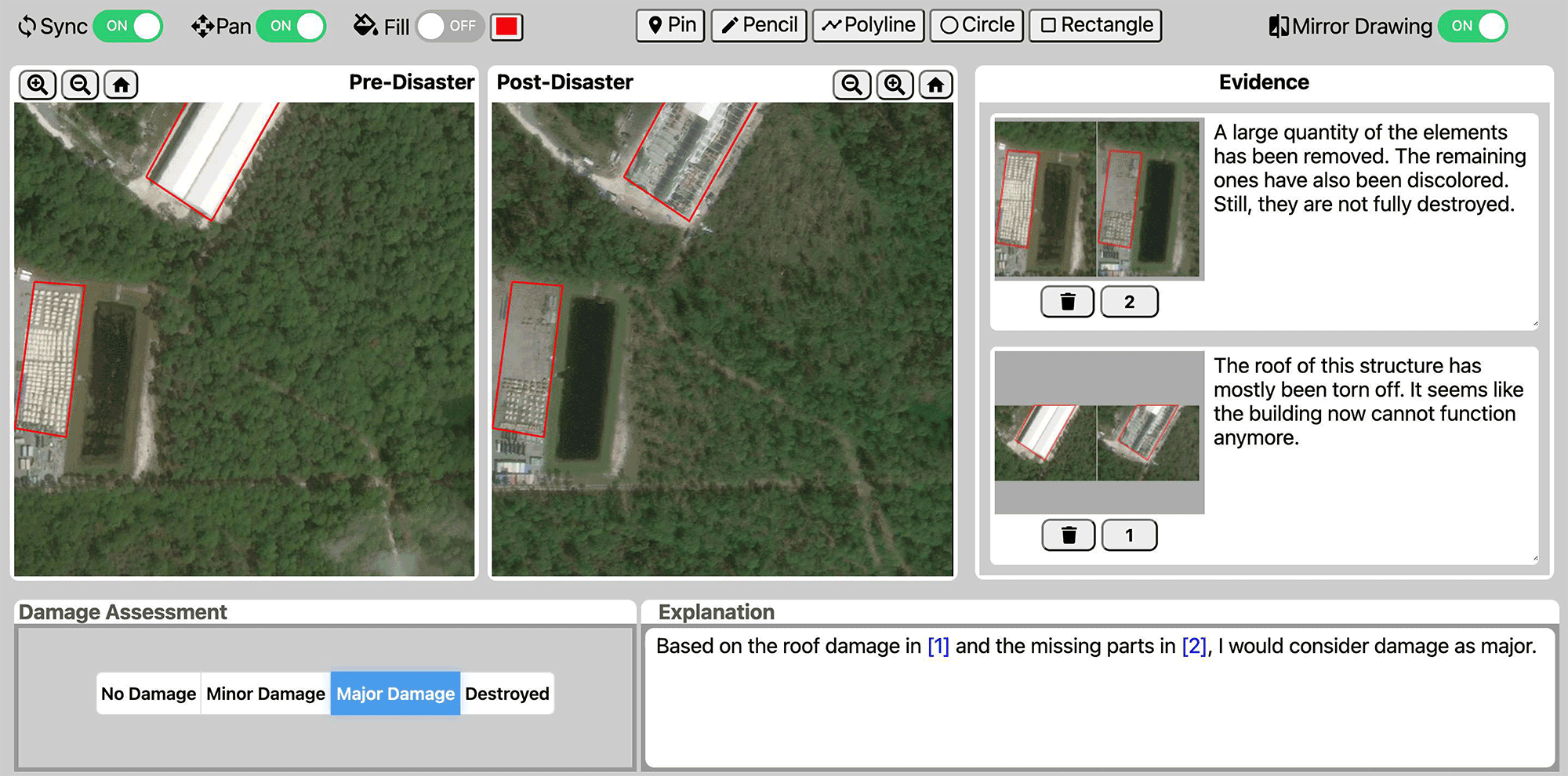

Explainable AI (XAI) is a promising means of supporting human-AI collaboration in high-stakes visual detection tasks, such as detecting damage from satellite imagery, because fully automated approaches are unlikely to be perfectly safe and reliable. However, most existing XAI techniques are not informed by an understanding of the task-specific explanation needs of humans. We therefore took a first step toward understanding what forms of XAI humans require in damage detection tasks. We conducted an online crowdsourced study to understand how people explain their own assessments when evaluating the severity of building damage from satellite imagery. Through this study with 60 crowdworkers, we surfaced six major strategies that humans use to explain their visual damage assessments. We present implications of our findings for the design of XAI methods in such visual detection contexts and discuss opportunities for future research.