Discovering and Validating AI Errors With Crowdsourced Failure Reports

Published at CSCW 2021

Abstract

AI systems can fail to learn important behaviors, leading to real-world issues

like safety concerns and biases. Unfortunately, discovering these systematic

failures often requires significant developer attention, from hypothesizing

potential edge cases to collecting evidence and validating patterns. To scale

and streamlinethis process, we introduce failure reports, end-user descriptions

of how or why a model failed, and show how developers can use them to detect AI

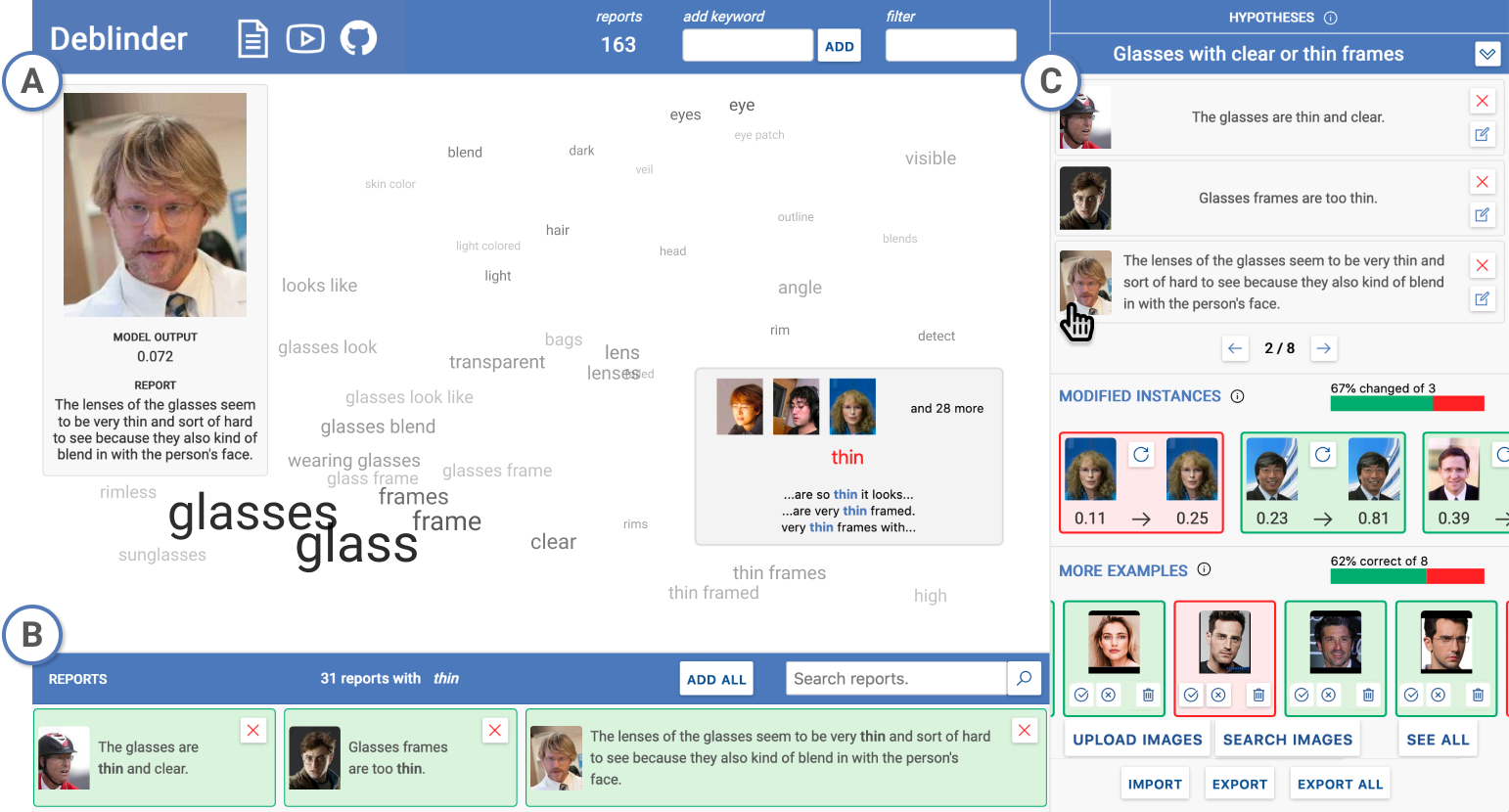

errors. We also design and implement Deblinder, a visual analytics system for

synthesizing failure reports that developers can use to discover and validate

systematic failures. In semi-structured interviews and think-aloud studies with

10 AI practitioners, we explore the affordances of the Deblindersystem and the

applicability of failure reports in real-world settings. Lastly, we show how

collecting additional data from the groups identified by developers can improve

model performance.