Regularizing Black-box Models for Improved Interpretability

Published at

NeurIPS

| Vancouver

2020

Abstract

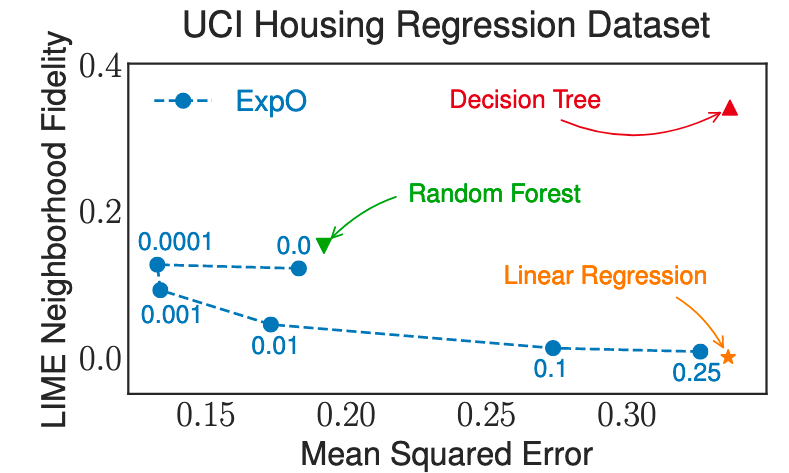

Most of the work on interpretable machine learning has focused on designing

either inherently interpretable models, which typically trade-off accuracy for

interpretability, or post-hoc explanation systems, whose explanation quality can

be unpredictable. Our method, ExpO, is a hybridization of these approaches that

regularizes a model for explanation quality at training time. Importantly, these

regularizers are differentiable, model agnostic, and require no domain knowledge

to define. We demonstrate that post-hoc explanations for ExpO-regularized models

have better explanation quality, as measured by the common fidelity and

stability metrics. We verify that improving these metrics leads to significantly

more useful explanations with a user study on a realistic task.