Designing Alternative Representations of Confusion Matrices to Support Non-Expert Public Understanding of Algorithm Performance

Published at CSCW, Miami, 2020

Abstract

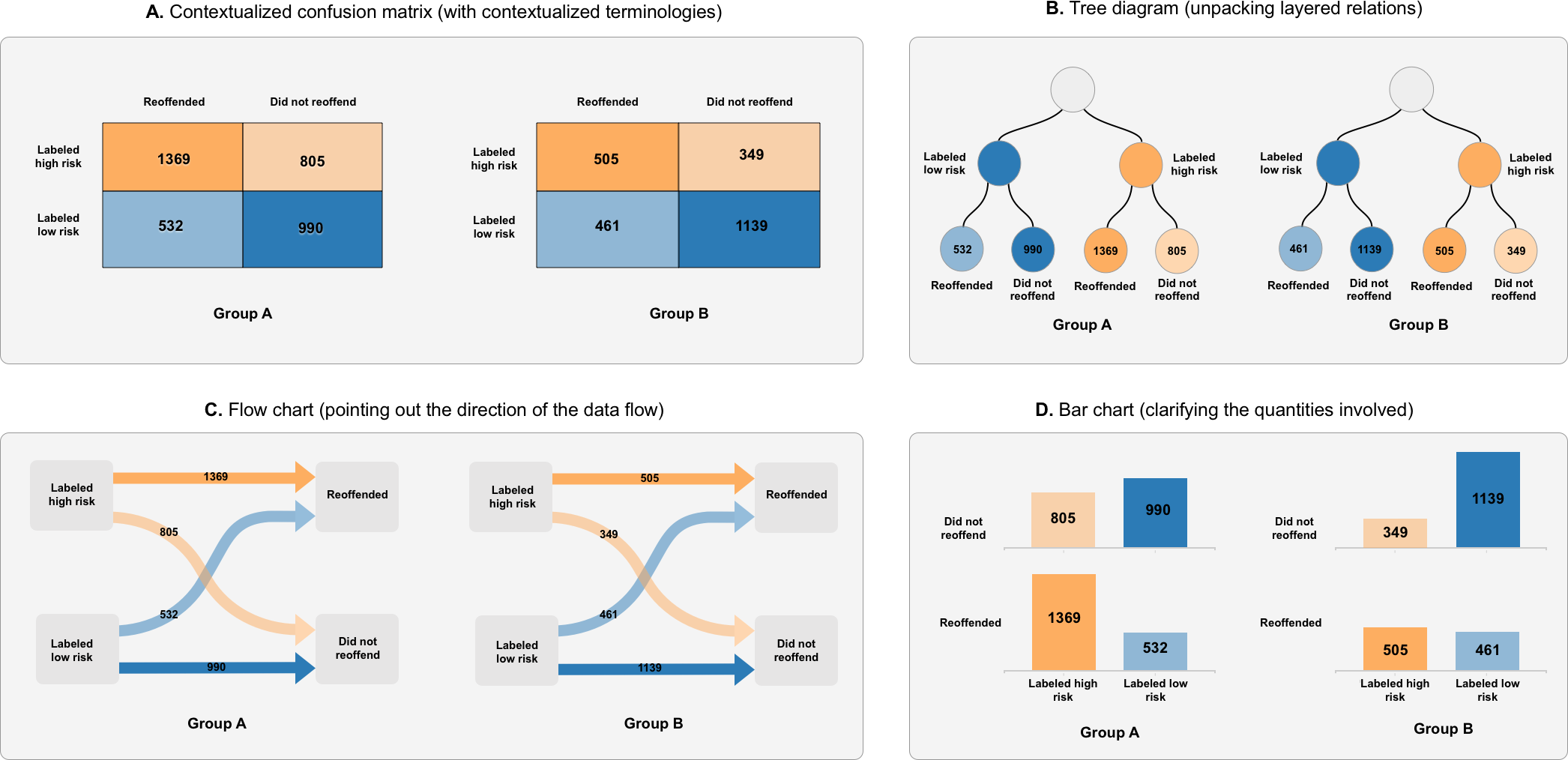

Ensuring effective public understanding of algorithmic decisions powered by machine learning techniques has become an urgent task as AI systems are increasingly deployed in our society. In this work, we present a concrete step toward this goal by redesigning confusion matrices for binary classification to support non-experts in understanding the performance of machine learning models. Through interviews (n=7) and a survey (n=102), we mapped out two major sets of challenges lay people have in understanding standard confusion matrices: the general terminology and the matrix design. We further identified three sub-challenges regarding the matrix design: confusion about the direction in which to read the data, the layered relations, and the quantities involved. We then conducted an online experiment with 483 participants to evaluate how effectively a series of alternative representations targets each of those challenges in the context of an algorithm for making recidivism predictions. We developed three levels of questions to evaluate users' objective understanding, and we assessed the effectiveness of our alternatives in terms of accuracy in answering those questions, completion time, and subjective understanding. Our results suggest that (1) only by contextualizing terminology can we significantly improve users' understanding and (2) flow charts, which make the direction for reading the data explicit, were the most useful in improving objective understanding. Our findings set the stage for developing more intuitive and generally understandable representations of the performance of machine learning models.
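The following minimal Python sketch makes the abstract's terms concrete. It is not the authors' material. It counts the four cells of a binary confusion matrix and then restates them in contextualized language for a recidivism setting. It reads the counts in one explicit direction, first by actual outcome and then by prediction, loosely echoing the flow-chart idea. Several pieces are hypothetical illustrations, not from the study: the toy `actual`/`predicted` labels, the `CONTEXT_LABELS` wording, and the `confusion_counts`/`describe` helpers.

```python
from collections import Counter

# Hypothetical toy labels (NOT data from the study):
# 1 = reoffended / predicted to reoffend, 0 = did not.
actual    = [1, 1, 0, 0, 1, 0, 0, 1, 0, 0]
predicted = [1, 0, 0, 1, 1, 0, 0, 1, 0, 1]

def confusion_counts(actual, predicted):
    """Count the four cells of a binary confusion matrix."""
    pairs = Counter(zip(actual, predicted))
    return {
        "TP": pairs[(1, 1)],  # actual 1, predicted 1
        "FN": pairs[(1, 0)],  # actual 1, predicted 0
        "FP": pairs[(0, 1)],  # actual 0, predicted 1
        "TN": pairs[(0, 0)],  # actual 0, predicted 0
    }

# Hypothetical contextualized wording replacing the generic TP/FN/FP/TN terms.
CONTEXT_LABELS = {
    "TP": "reoffended, and the algorithm predicted they would",
    "FN": "reoffended, but the algorithm predicted they would not",
    "FP": "did not reoffend, but the algorithm predicted they would",
    "TN": "did not reoffend, and the algorithm predicted they would not",
}

def describe(counts):
    """Read the matrix in one fixed direction: actual outcome first, then prediction."""
    lines = []
    groups = (("reoffended", ("TP", "FN")), ("did not reoffend", ("FP", "TN")))
    for outcome, cells in groups:
        total = sum(counts[c] for c in cells)
        lines.append(f"{total} people {outcome}:")
        for c in cells:
            lines.append(f"  {counts[c]} {CONTEXT_LABELS[c]}")
    return "\n".join(lines)

if __name__ == "__main__":
    print(describe(confusion_counts(actual, predicted)))
```

Applied to the toy labels, `confusion_counts` yields 3 true positives, 1 false negative, 2 false positives, and 4 true negatives. `describe` then states these counts without the generic terms. Reading from actual outcome to prediction is an assumed convention here. Other tools and layouts may orient rows and columns differently.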