Ablate, Variate, and Contemplate: Visual Analytics for Discovering Neural Architectures

Published at VAST 2019 | Vancouver, Canada

Abstract

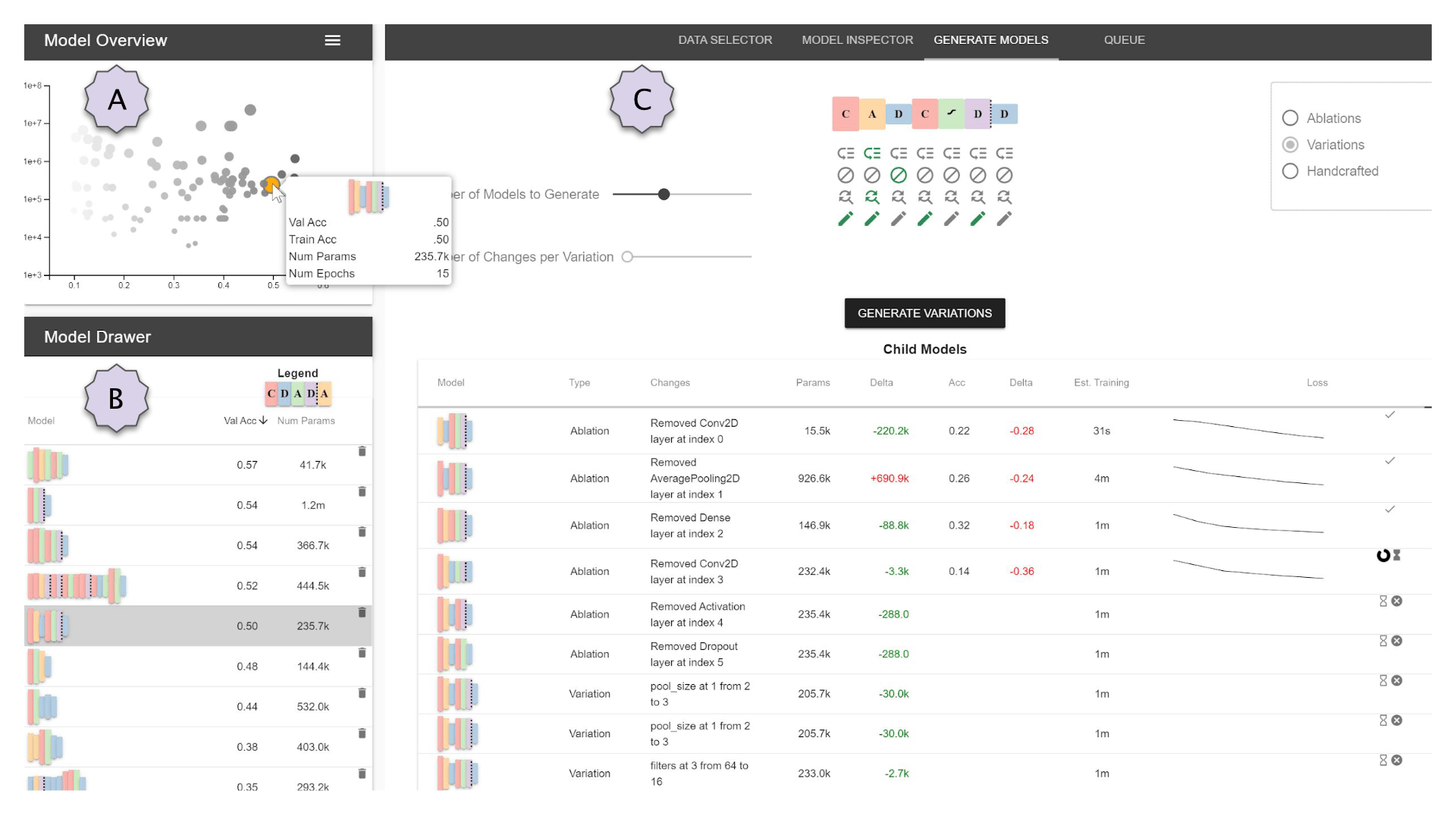

The performance of deep learning models depends on the precise configuration of many layers and parameters. However, there are currently few systematic guidelines for configuring a successful model. Consequently, model builders often have to either experiment with different configurations by manually programming different architectures (which is tedious and time-consuming) or rely on purely automated approaches to generate and train the architectures (which is computationally expensive). In this paper, we present Rapid Exploration of Model Architectures and Parameters, or REMAP, a visual analytics tool that allows a model builder to discover a deep learning model quickly through exploration and rapid experimentation with neural network architectures. In REMAP, the user explores the large and complex parameter space of neural network architectures using a combination of global inspection and local experimentation. Through a visual overview of a set of models, the user identifies interesting clusters of architectures. Based on these findings, the user can run ablation and variation experiments to identify the effects of adding, removing, or replacing layers in a given architecture and generate new models accordingly. Users can also handcraft new models using a simple graphical interface. As a result, a model builder can build deep learning models quickly, efficiently, and without manual programming. We inform the design of REMAP through a design study with four deep learning model builders. Through a use case, we demonstrate that REMAP allows users to discover performant neural network architectures efficiently using visual exploration and user-defined semi-automated searches through the model space.
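To make the idea of ablation and variation experiments more concrete, the following is a minimal sketch that assumes a model is represented as a plain sequential list of layer names. This representation is a hypothetical simplification, since the abstract does not describe REMAP's internal model format. `ablations` removes one layer at a time. `variations` either replaces one layer or inserts one new layer drawn from a hypothetical candidate set, `CANDIDATE_LAYERS`. The sketch only generates the child architectures. Training and comparing them, which REMAP's semi-automated search would do, is not shown.

```python
from typing import List

# Hypothetical representation: a sequential architecture as an ordered list of layer names.
Architecture = List[str]

# Hypothetical layer vocabulary; the abstract does not specify REMAP's actual layer types.
CANDIDATE_LAYERS = ["conv3x3", "conv5x5", "maxpool", "dropout", "dense"]


def ablations(arch: Architecture) -> List[Architecture]:
    """Return one child per layer, each with exactly that layer removed."""
    return [arch[:i] + arch[i + 1:] for i in range(len(arch))]


def variations(arch: Architecture, candidates: List[str] = CANDIDATE_LAYERS) -> List[Architecture]:
    """Return unique children that replace one layer or insert one new layer."""
    children: List[Architecture] = []
    # Replacements: swap the layer at position i for every *different* candidate type.
    for i, layer in enumerate(arch):
        for cand in candidates:
            if cand != layer:
                children.append(arch[:i] + [cand] + arch[i + 1:])
    # Insertions: add each candidate at every position, including after the last layer.
    for i in range(len(arch) + 1):
        for cand in candidates:
            children.append(arch[:i] + [cand] + arch[i:])
    # Inserting a layer next to an identical one yields the same child twice,
    # so drop duplicates while preserving generation order.
    unique: List[Architecture] = []
    seen = set()
    for child in children:
        key = tuple(child)
        if key not in seen:
            seen.add(key)
            unique.append(child)
    return unique


if __name__ == "__main__":
    base = ["conv3x3", "maxpool", "dense"]
    for child in ablations(base):
        print("ablation:", child)
    print("number of variations:", len(variations(base)))
```

Separating ablations from variations mirrors the distinction the abstract draws. Ablations isolate the contribution of each existing layer, and variations explore nearby alternatives. Both produce architectures that differ from the parent by a single edit, so any change in accuracy after training can be attributed to that one edit.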