MAST: A Tool for Visualizing CNN Model Architecture Searches

Published at the Debugging Machine Learning Models workshop, ICLR 2019 | New Orleans, LA

Abstract

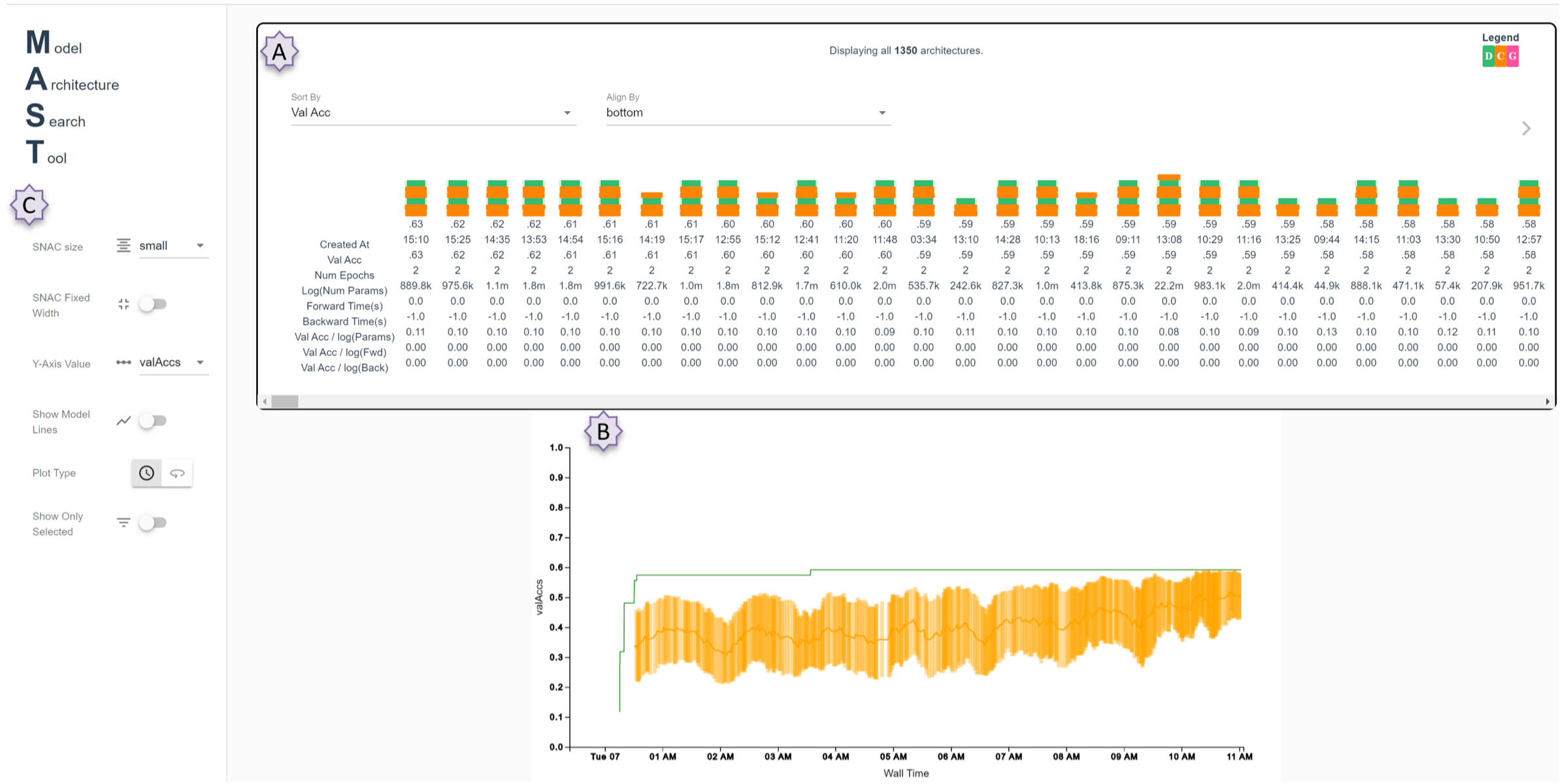

Any automated search over a model space consumes substantial computational resources to ultimately discover a small set of performant models. It also produces large amounts of data, including the training curves and model information for thousands of models. This expensive process may be wasteful if the automated search fails to find better models over time, or if promising models are prematurely discarded during the search. In this work, we describe a visual analytics tool for exploring the rich data generated during a search over feed-forward convolutional neural network (CNN) architectures. A visual overview of the training process lets the user verify assumptions about the architecture search, such as an expected improvement in sampled model performance over time. Users can select subsets of architectures from the model overview and compare them visually to identify patterns in layer subsequences that may be causing poor performance. Lastly, by viewing loss and training curves and by comparing the number of parameters of the selected architectures, users can interactively choose a model with more control than an automated metalearning algorithm provides. We present screenshots of our tool applied to three different metalearning algorithms on CIFAR-10, and outline future directions for applying visual analytics to architecture search.
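The abstract names two checks a user performs with the tool: confirming that sampled models improve over time, and spotting layer subsequences associated with poor performance. It does not say how the tool computes either view, so the following is only a minimal sketch of the underlying bookkeeping. It assumes each sampled model is recorded as a list of layer-type strings plus a final validation accuracy. The functions `running_best`, `layer_ngrams`, and `enriched_subsequences` are hypothetical names for illustration and are not part of the tool described here.

```python
from __future__ import annotations

from collections import Counter
from typing import Sequence


def running_best(accuracies: Sequence[float]) -> list[float]:
    """Best validation accuracy seen so far, in the order models were sampled.

    A flat curve suggests the search is not finding better models over time.
    """
    best, out = float("-inf"), []
    for acc in accuracies:
        best = max(best, acc)
        out.append(best)
    return out


def layer_ngrams(layers: Sequence[str], n: int) -> Counter:
    """Count contiguous length-n layer subsequences in one architecture."""
    return Counter(tuple(layers[i:i + n]) for i in range(len(layers) - n + 1))


def enriched_subsequences(poor: Sequence[Sequence[str]],
                          good: Sequence[Sequence[str]],
                          n: int = 2) -> list[tuple[float, tuple[str, ...]]]:
    """Rank layer subsequences that occur in a larger fraction of poor models
    than of good models (hypothetical scoring: difference of per-group rates)."""
    poor_counts, good_counts = Counter(), Counter()
    for arch in poor:
        # Count presence once per model, not raw occurrences.
        poor_counts.update(set(layer_ngrams(arch, n)))
    for arch in good:
        good_counts.update(set(layer_ngrams(arch, n)))
    scores = {
        seq: poor_counts[seq] / len(poor) - good_counts[seq] / len(good)
        for seq in poor_counts
    }
    # Keep only subsequences over-represented in poor models, highest first.
    return sorted(((s, seq) for seq, s in scores.items() if s > 0), reverse=True)
```

For example, if the poorly performing models in a selection both contain two consecutive pooling layers and the good ones do not, `("pool", "pool")` ranks first. Scoring by per-group presence rate rather than raw counts keeps groups of different sizes comparable; a real tool might instead rely on visual comparison, as the abstract describes.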