Interacting with Predictions: Visual Inspection of Black-box Machine Learning Models

Published at CHI | Phoenix, AZ | 2016

Abstract

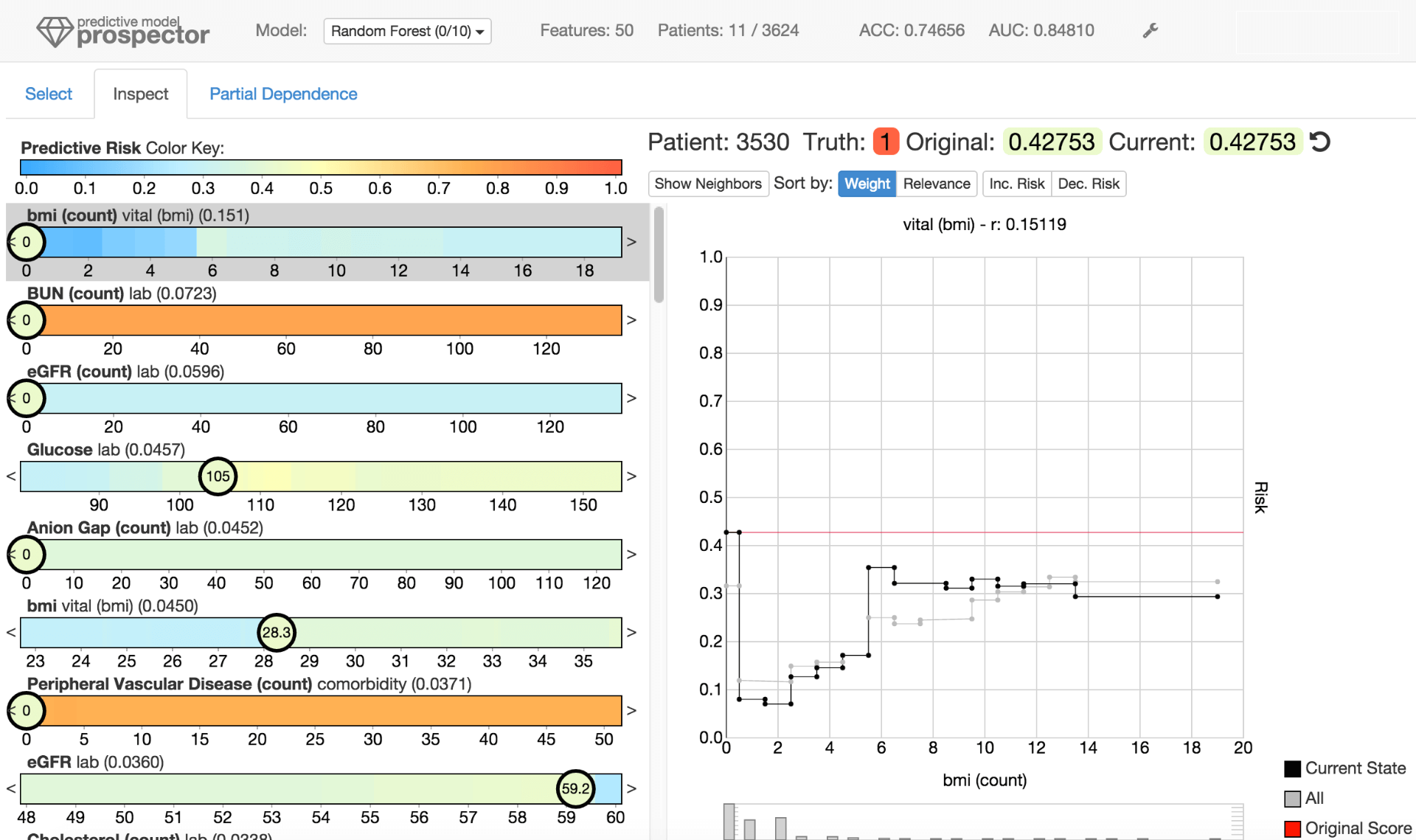

Understanding predictive models, in terms of interpreting them and identifying actionable insights, is a challenging task. Often the importance of a feature in a model is only a rough estimate condensed into one number. Our research goes beyond these naïve estimates through the design and implementation of an interactive visual analytics system, Prospector. Its interactive partial dependence diagnostics let data scientists understand how features affect the prediction overall. In addition, its support for localized inspection lets data scientists understand how and why specific datapoints are predicted as they are, and lets them tweak feature values and see how the prediction responds. We evaluate the system in a case study involving a team of data scientists improving predictive models for detecting the onset of diabetes from electronic medical records.
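
To make the two kinds of diagnostics concrete, here is a minimal Python sketch of a partial dependence curve and of a single-datapoint "what if" inspection. It is an illustration under stated assumptions, not Prospector's implementation: the function names, the toy model, and the two features (glucose, BMI) and their values are all hypothetical, and the model is treated as a black box that maps a feature row to a score.

```python
from typing import Callable, List, Sequence

Row = List[float]
Model = Callable[[Row], float]  # black box: feature row -> prediction score


def partial_dependence(predict: Model, rows: Sequence[Row],
                       feature: int, grid: Sequence[float]) -> List[float]:
    """Average prediction over all rows with one feature forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)       # copy so the input data is left untouched
            modified[feature] = value  # overwrite only the feature under study
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


def local_inspection(predict: Model, row: Row,
                     feature: int, grid: Sequence[float]) -> List[float]:
    """Predictions for one datapoint as a single feature value is tweaked."""
    responses = []
    for value in grid:
        modified = list(row)
        modified[feature] = value
        responses.append(predict(modified))
    return responses


# Hypothetical toy model: flags a row when both glucose and BMI are high.
def toy_model(row: Row) -> float:
    glucose, bmi = row
    return 1.0 if glucose > 120 and bmi > 25 else 0.0


# Made-up rows of [glucose, bmi], for illustration only.
rows = [[100.0, 22.0], [130.0, 31.0], [150.0, 24.0], [110.0, 35.0]]
grid = [90.0, 125.0, 160.0]

pd_curve = partial_dependence(toy_model, rows, feature=0, grid=grid)
local_curve = local_inspection(toy_model, rows[0], feature=0, grid=grid)
```

In this toy setup the partial dependence curve summarizes how glucose affects the prediction across the whole dataset, while the local curve shows how one specific datapoint would be scored if only its glucose value changed. The two can disagree, which is the motivation for inspecting individual datapoints as well as the global trend.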